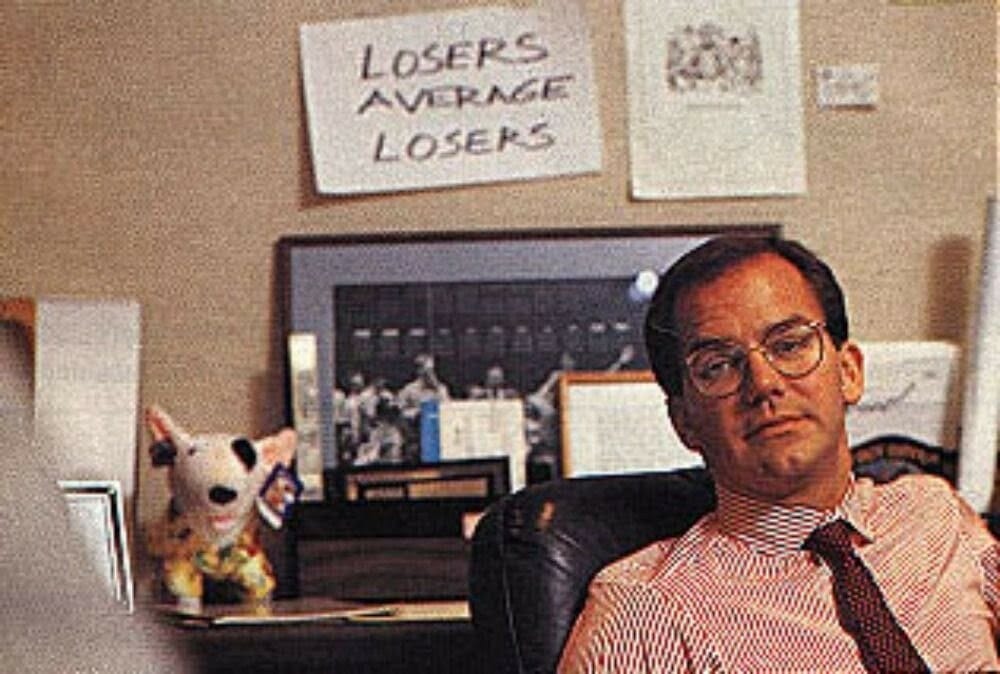

Losers Average Losers

Or, Win Big At Gambling And Markets With This One Weird Trick!

Longtime readers of my Twitter feed will be aware that one of my favorite pieces of advice is the classic maxim “losers average losers”. Failure to adhere to this simple piece of advice is probably the #1 reason why investors of all skill levels, ranging from a newly minted Robinhood user long 1 share of GME all the way up to Bill Miller owning close to 10% of Freddie Mac, experience catastrophic losses and permanently impair their capital. Even I am not immune to this, and blindly averaging down in a rush to capitalize on a “good price” is responsible for my worst personal investment loss ever.

However, while this maxim certainly feels true to many market participants, it’s less than clear precisely why it’s true. Recently, I was noodling around in Mathematica on an unrelated problem, and stumbled upon a nifty little heuristic proof of why this works, and I figured it was intriguing enough to warrant resurrecting my blog from the dead (thankfully, it hasn’t been 200 years yet!) to share with those who may be interested. I’m not aware of any other instance of this particular logic being used, but if there is a paper or other article on this, please let me know and I’ll link it here!

For the rest of the post, I will reproduce and explain the derivation here in some detail, so do be aware that there is math- more math than my last post- in this blog. Substack has given me the ability to write in LaTeX, and I absolutely will abuse this feature to the fullest extent permitted.

Author note: Substack mobile (and possibly email) seem to be hit-or-miss at rendering LaTeX correctly- it is a beta feature. If you are having trouble reading equations, please view this post on a desktop instead!

Plenty of people have written more or less ad nauseam about the applicability of the Kelly Criterion in finance, and I am on the record as saying that most of the time, that’s stupid. There certainly are a few instances where it’s warranted (such as, well, warrants), but in general one should regard such prognostications with suspicion, especially when associated with continuous and highly uncertain outcome distributions like “the returns of the S&P 500”, where it’s essentially useless. Of course, *I* get to make these comparisons, and it’s fine, because shut up, that’s why.

A brief mathematical refresher, for the uninitiated: the Kelly criterion is a method for optimally sizing a gamble under uncertainty. It is invariant to level of wealth, and as a result assigns a specific fraction of your total portfolio to each individual bet, with the ultimate goal of maximizing the expected logarithm of ending wealth. For the choice between a single binary $1 bet that pays out X dollars with probability p and $0 with probability 1-p and an allocation to cash, this corresponds to the following expression (neglecting interest rates, etc.):

$$
w^* = \underset{w}{\arg\max}\; \Big[\, p \ln\big(1 + w(X - 1)\big) + (1 - p) \ln(1 - w) \,\Big]
$$

A little calculus and some simplification- I won’t bore you with this, but go ahead and check my work if you want to verify it- gives the expression for w*:

$$
w^* = \frac{pX - 1}{X - 1}
$$

Astute observers will immediately note that if p = 1/X (the bet is fair), then w* = 0 and we should not bet anything. If p > 1/X, then w* > 0, and if p < 1/X, then w* < 0. In roulette, for example, p(Red) = 18/38, but X = 2, so w* = -1/19, which means that the way to win big at the roulette table is to open a casino running them instead of playing at one (this is good advice in general when it comes to table games).
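If you’d rather not do the calculus, here is a minimal Python sketch that checks the closed form numerically. It compares w* = (pX - 1)/(X - 1) against a crude grid search over the expected log of ending wealth for the same $1 binary bet. The function names (`kelly_fraction`, `expected_log_wealth`, `brute_force_kelly`) are my own, hypothetical helpers, not from any library.

```python
import math


def kelly_fraction(p: float, x: float) -> float:
    """Closed-form Kelly fraction for a $1 bet paying x dollars with probability p."""
    return (p * x - 1) / (x - 1)


def expected_log_wealth(w: float, p: float, x: float) -> float:
    """Expected log of ending wealth (starting from 1) when staking a fraction w.

    Win (probability p): each staked dollar becomes x dollars -> 1 + w * (x - 1).
    Lose (probability 1 - p): the stake is gone -> 1 - w.
    """
    return p * math.log(1 + w * (x - 1)) + (1 - p) * math.log(1 - w)


def brute_force_kelly(p: float, x: float, steps: int = 100_000) -> float:
    """Grid-search the stake that maximizes expected log wealth.

    Only stakes that keep wealth strictly positive in both outcomes are tried,
    i.e. -1 / (x - 1) < w < 1 (a negative w means taking the other side).
    """
    lo, hi = -1 / (x - 1), 1.0
    grid = (lo + (hi - lo) * i / steps for i in range(1, steps))
    return max(grid, key=lambda w: expected_log_wealth(w, p, x))


if __name__ == "__main__":
    for label, p, x in [("roulette, red", 18 / 38, 2), ("biotech", 0.10, 20)]:
        print(f"{label}: closed form {kelly_fraction(p, x):+.4f}, "
              f"grid search {brute_force_kelly(p, x):+.4f}")
```

The grid search is deliberately dumb; it is only there to confirm that the formula lands on the maximum, including the negative (take-the-house-side) answer for roulette.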

Readers of this Substack, of course, are far too classy to lose money in Vegas or Monte Carlo; as gentlemen and ladies of leisure, they prefer to lose their money in the financial markets. There are quite a few instances of this phenomenon in markets- binary options, litigation outcomes, debt in deeply distressed companies- but the canonical example is biotech stocks, so that’s what we’ll use here[1]. Just like the gambling example above, this is perfectly generalizable to both long and short positions, but we’ll focus just on the long side for simplicity- it’s all the same, mutatis mutandis.

Consider a pre-phase 1 biotech currently trading at a $500 million market cap. The sell-side reports (which are surely objective and correct and not at all currying favor for the inevitable future offering) suggest a terminal value of $10 billion in the event it succeeds in its eventual phase 3 trial, so we will assume that if this occurs your investment will pay off 20:1. In the event it fails somewhere along the way, executives will steal all your money and you will ~~baghold the stock to 0 and lose your entire investment~~ smartly generate a tax loss from a worthless security.

Because you are very clever, you spend your time reading all the relevant scientific literature (and spend your investors’ money on expert network calls to various doctors and PhDs), and you conclude that the drug mechanism seems much more plausible than the market thinks- in fact, you believe there’s a 10% chance that it passes all the trials and your bet pays off. The Kelly optimal fraction is easy to calculate in this case: (0.1*20-1)/(20 - 1) = 1/19, so you should put about 5.3% of your portfolio into this long-shot bet, since the odds are in your favor and the payoff is so substantial.[2]
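Here is the same arithmetic done exactly with Python’s `fractions.Fraction`, as a small sketch under the example’s assumptions (10% subjective probability, 20:1 payoff). It shows the edge per dollar staked, the 1/19 fraction, and, as a sanity check on “optimal”, that full Kelly has a higher expected log growth than either half or double that size. The `log_growth` helper is my own naming.

```python
from fractions import Fraction
import math

# Numbers from the example: 10% subjective chance of success, 20:1 payoff.
p = Fraction(1, 10)
x = Fraction(20)

edge = p * x - 1                 # expected profit per $1 staked: exactly 1 here, i.e. +100%
w_star = (p * x - 1) / (x - 1)   # Kelly fraction, computed exactly


def log_growth(w: float) -> float:
    """Expected log growth of wealth per bet when staking fraction w."""
    return float(p) * math.log(1 + w * float(x - 1)) + float(1 - p) * math.log(1 - w)


print(f"edge per dollar: {edge}")
print(f"Kelly fraction: {w_star} = {float(w_star):.4%}")
for label, w in [("half Kelly", w_star / 2), ("full Kelly", w_star), ("double Kelly", 2 * w_star)]:
    print(f"{label:>12}: expected log growth {log_growth(float(w)):.5f}")
```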

At this point, I’d like to take a brief detour into Bayesian statistics (it will be brief, I promise). You have probably seen Bayes’ theorem before, written something like this:

$$
P(H \mid E) = \frac{P(E \mid H)\, P(H)}{P(E)}
$$

We won’t be using this formula, because I hate it for reasons of aesthetic crankery. Instead, we will use a slightly different formula we get by rearranging terms:

$$
\underbrace{\ln \frac{P(H \mid E)}{P(\lnot H \mid E)}}_{\text{log posterior odds}} = \underbrace{\ln \frac{P(H)}{P(\lnot H)}}_{\text{log prior odds}} + \underbrace{\ln \frac{P(E \mid H)}{P(E \mid \lnot H)}}_{\text{log Bayes Factor}}
$$

The Bayes Factor term is a ratio of the likelihoods of the evidence under the two hypotheses, and so gives a way to evaluate the “strength” of the information we have available to us: the further it is from 1, the further our posterior odds shift away from our prior odds (up if it is above 1, down if it is below 1). For example, say I flipped a coin 10 times, and you started out with even prior odds (1:1) that my coin was fair rather than two-headed. If I got 10 heads on my flips, you might suspect that I actually had a coin with two heads, and the Bayes Factor would be the ratio of the probability I got 10 heads with a fair coin (p = 2^-10) vs. 10 heads with a coin that only came up heads (p = 1). Thus, your posterior probability that the coin is fair would be about 0.0976% (1 in 1,025), and you would be quite confident I used a two-headed coin and quite disinclined to bet tails would appear on the next flip.
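The coin example in odds form, as a minimal Python sketch with exact fractions. It assumes, as in the example, even prior odds between “fair” and “two-headed”; the variable names are just illustrative.

```python
from fractions import Fraction

# Hypotheses: H = "the coin is fair", not-H = "the coin has two heads".
prior_odds = Fraction(1, 1)  # assumption: even prior odds of fair vs. two-headed

# Likelihood of observing 10 heads in 10 flips under each hypothesis.
likelihood_fair = Fraction(1, 2) ** 10  # 1/1024
likelihood_two_headed = Fraction(1)     # a two-headed coin always lands heads

bayes_factor = likelihood_fair / likelihood_two_headed
posterior_odds = prior_odds * bayes_factor  # posterior odds = prior odds * Bayes Factor
posterior_prob_fair = posterior_odds / (1 + posterior_odds)

print(f"Bayes Factor: {bayes_factor}")
print(f"P(fair | 10 heads) = {posterior_prob_fair} ~ {float(posterior_prob_fair):.4%}")
```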

Importantly, the transformation we have done into log odds space means that the same amount of evidence gets the correct nonlinear adjustment across the entire probability space: if we initially have a 1% or a 50% or a 99% chance of an event occurring, an extra bit of evidence that the event will occur (a Bayes Factor of 2) will correctly take us to about 2% or 67% or 99.5%, in a way that dealing with raw probabilities won’t.
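To see the “one bit of evidence” claim concretely, here is a short Python sketch that converts a probability to log odds, adds ln 2 (a Bayes Factor of 2), and converts back. `update_probability` is a hypothetical helper name of my own.

```python
import math


def update_probability(prior: float, bayes_factor: float) -> float:
    """Shift a probability by a Bayes Factor in log-odds space and convert back."""
    log_odds = math.log(prior / (1 - prior)) + math.log(bayes_factor)
    return 1 / (1 + math.exp(-log_odds))


for prior in (0.01, 0.50, 0.99):
    print(f"{prior:.0%} -> {update_probability(prior, 2):.2%}")
```

Doubling the odds moves 1% to about 1.98%, 50% to about 66.67%, and 99% to about 99.50%: a large step in the middle of the range and a small one near the edges, which is exactly what adding a constant in log-odds space does.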

In our example above, the prior probability is given by the market (5%) and our posterior probability by our own back-of-the-envelope vibes (10%), so we can work backwards to calculate the effective Bayes Factor (BF) of our beliefs as follows:

$$
\mathrm{BF} = \frac{0.10 / 0.90}{0.05 / 0.95} = \frac{19}{9} \approx 2.111
$$

At this point, let’s introduce some standard notation to make our lives easier. The logit function transforms probabilities to log odds, and the logistic function turns log odds into probabilities. They’re defined as follows:

$$
\operatorname{logit}(p) = \ln \frac{p}{1 - p}, \qquad \operatorname{logistic}(x) = \frac{1}{1 + e^{-x}}
$$

We could just as easily have written the above expression as:

$$
\ln \mathrm{BF} = \operatorname{logit}(0.10) - \operatorname{logit}(0.05) \approx 0.747
$$

and that’s how we’ll be writing things going forward. Alright, that’s enough of that, let’s return to our original example before this turns into LessWrong.
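Backing out the Bayes Factor from the two probabilities is a one-liner once you work in odds. A minimal sketch with exact fractions; the `odds` helper is my own naming, and the 5% and 10% inputs are just the example’s numbers.

```python
from fractions import Fraction
import math


def odds(p: Fraction) -> Fraction:
    """Convert a probability into odds p / (1 - p)."""
    return p / (1 - p)


market_prob = Fraction(5, 100)  # prior: probability implied by the market price
own_prob = Fraction(10, 100)    # posterior: our own estimate after the research

bayes_factor = odds(own_prob) / odds(market_prob)
print(f"BF = {bayes_factor} ~ {float(bayes_factor):.3f}")
# Equivalent to logit(0.10) - logit(0.05) in log-odds space.
print(f"ln BF = {math.log(float(bayes_factor)):.3f}")
```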

Ok, so we’re chugging along now, with 5.3% of our portfolio in this fantastic speculative investment, when suddenly we hit a snag. The company publishes the highly anticipated preliminary readout of its initial trials, and the results are not as great as the market hoped. The stock immediately falls 50%, as other investors revise down their probability of success by a factor of 2, to 2.5% instead of 5%. What does your portfolio look like now? Well, your investment is now only worth 1/2 of 1/19th of your portfolio, so it’s 1/38th of your initial wealth, and you’ve lost the other 1/2 of 1/19th of your portfolio, so you now have 37/38ths of your initial wealth. That means (1/38)/(37/38) = 1/37th of your current portfolio is in this name.
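The mark-to-market arithmetic, as a tiny Python sketch with exact fractions (variable names are illustrative): the cash stays put, the stake halves, and the weight drifts from 1/19 to 1/37 without any trading.

```python
from fractions import Fraction

w0 = Fraction(1, 19)          # initial Kelly weight in the biotech name
price_ratio = Fraction(1, 2)  # the stock falls 50%

position = w0 * price_ratio   # value of the stake, as a fraction of initial wealth
wealth = (1 - w0) + position  # untouched cash plus the marked-down stake
w1 = position / wealth        # new portfolio weight, no trades made

print(position, wealth, w1)   # prints: 1/38 37/38 1/37
```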

That’ll sting, and your monthly letter will be a bit awkward to write, but whatever, you’ve lost more and bounced back- perhaps it’s time to average down. Based on your research, you’re still just as relatively confident as you were before- even if you don’t think the odds are 10% anymore, you still believe your Bayes Factor is 2.111. So, what is your new expected probability of success? Because the Bayes Factor multiplies the odds rather than the probability, you don’t also revise your estimate down by a factor of 2 to 5%, but rather to:

$$
q_1 = \operatorname{logistic}\!\Big(\operatorname{logit}(0.025) + \ln \tfrac{19}{9}\Big) = \frac{19}{370} \approx 5.14\%
$$

Also, since the market cap has been cut in half but our success outcome is unchanged (it’s just less likely now), the payoff in the event of success has doubled to 40x from 20x. Thus, our new Kelly fraction is:

$$
w_1^* = \frac{q_1 X_1 - 1}{X_1 - 1} = \frac{\frac{19}{370} \cdot 40 - 1}{40 - 1} = \frac{39/37}{39} = \frac{1}{37}
$$

CURIOUS. Our optimal Kelly fraction is precisely the same as our current position after taking the loss! As it turns out, we should do nothing to change the size of this position, since it’s still just as optimal as it was before the change in market-assessed probabilities. In other words, the Kelly sizing of a binary outcome is invariant to the arrival of new information, so long as that new information does not change the original piece of side information that caused us to put on the bet.
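Re-deriving the Kelly fraction from scratch after the drop, as a minimal exact-arithmetic sketch. It assumes, as in the text, that the Bayes Factor stays at 19/9 and the payoff doubles to 40x; `update` and `kelly_fraction` are my own helper names.

```python
from fractions import Fraction

bayes_factor = Fraction(19, 9)  # our (unchanged) strength of evidence
market_prob = Fraction(1, 40)   # 2.5% implied after the readout
payoff = Fraction(40)           # success value unchanged, price halved: 40x


def update(p: Fraction, bf: Fraction) -> Fraction:
    """Multiply the odds of p by bf and convert back to a probability (exact)."""
    posterior_odds = bf * p / (1 - p)
    return posterior_odds / (1 + posterior_odds)


def kelly_fraction(p: Fraction, x: Fraction) -> Fraction:
    """Kelly fraction for a bet paying x per $1 staked with probability p."""
    return (p * x - 1) / (x - 1)


q1 = update(market_prob, bayes_factor)
w1_star = kelly_fraction(q1, payoff)
print(q1, w1_star)  # prints: 19/370 1/37
```

The result matches the 1/37 weight the position drifted to on its own, which is the whole point.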

This isn’t just a contrived example I picked to make the math work out, either. Let’s assume that our initial price, prior to the arrival of new information, is p₀, and our payoff is X. We have incremental positive information in the form of a Bayes factor, BF₀ > 1, which we use to size our initial bet. Then our initial Kelly fraction is:

$$
w_0^* = \frac{q_0 X - 1}{X - 1} = \frac{(\mathrm{BF}_0 - 1)\, p_0}{1 + (\mathrm{BF}_0 - 1)\, p_0}, \qquad \text{where } q_0 = \operatorname{logistic}\big(\operatorname{logit}(p_0) + \ln \mathrm{BF}_0\big),\; X = \frac{1}{p_0}
$$

Now, new information arrives, the market adjusts its implied probability of the positive outcome[3], and the market price moves from p₀ to p₁. Our new portfolio weight is then:

$$
w_1 = \frac{w_0^* \, p_1 / p_0}{1 - w_0^* + w_0^* \, p_1 / p_0} = \frac{(\mathrm{BF}_0 - 1)\, p_1}{1 + (\mathrm{BF}_0 - 1)\, p_1}
$$

But this is the same value as if we re-derived the optimal weight just using what was known from p₁, without referring to p₀ at all:

$$
w_1^* = \frac{(\mathrm{BF}_1 - 1)\, p_1}{1 + (\mathrm{BF}_1 - 1)\, p_1} = w_1 \quad \text{when } \mathrm{BF}_1 = \mathrm{BF}_0
$$

Now here’s the kicker. Everything we have done above assumes BF₀ = BF₁, and that our estimate doesn’t change over time, but that’s hardly best Bayesian practice- because it’s a very hazy estimate we essentially pulled out of thin air, it should itself be uncertain and subject to updating as new information arrives! If p₁ > p₀, then the price has increased because the market has received confirming evidence, and this confirming evidence means BF₁ should grow towards the upper end of its range as we become more confident we were right. As a result, w₁* > w₁ and we should increase the position size by more than it grew in the market. By contrast, if p₁ < p₀, then the new evidence conflicts with our old evidence, and we should become more suspicious that we were right in the first place and shrink BF₁ towards the lower end of its range, so w₁* < w₁ and we should cut our position by more than it moved down. In other words, the optimal strategy under uncertainty is to momentum trade price movements in the market, rather than fade them and double down on losing trades.
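For skeptics of algebra, here is a brute-force Python sketch of the general claim. It assumes the same normalization as the example (the price equals the market-implied probability, so the payoff per dollar is 1/p), draws random p₀, p₁ and BF₀ from arbitrary ranges I picked, and checks that the drifted weight always equals the re-derived Kelly weight. The second assertion only checks that the Kelly weight rises with BF, which is what drives the momentum argument. All function names are hypothetical.

```python
import math
import random


def logit(p: float) -> float:
    return math.log(p / (1 - p))


def logistic(z: float) -> float:
    return 1 / (1 + math.exp(-z))


def kelly_weight(market_prob: float, bf: float) -> float:
    """Kelly fraction when the price equals market_prob (so the payoff per $1
    is 1/market_prob) and our side information is worth a Bayes Factor bf."""
    q = logistic(logit(market_prob) + math.log(bf))
    x = 1 / market_prob
    return (q * x - 1) / (x - 1)


def drifted_weight(w0: float, p0: float, p1: float) -> float:
    """Portfolio weight after the price moves from p0 to p1 with no trading."""
    position = w0 * p1 / p0
    return position / (1 - w0 + position)


random.seed(0)
for _ in range(10_000):
    p0, p1 = random.uniform(0.01, 0.9), random.uniform(0.01, 0.9)
    bf0 = random.uniform(1.01, 5.0)
    w1 = drifted_weight(kelly_weight(p0, bf0), p0, p1)
    # Same Bayes Factor: the drifted position is already Kelly-optimal.
    assert math.isclose(w1, kelly_weight(p1, bf0), rel_tol=1e-9)
    # A larger Bayes Factor (e.g. after confirming news) calls for a bigger position.
    assert kelly_weight(p1, bf0 * 1.1) > w1

print("drifted weight matched the re-derived Kelly weight in every trial")
```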

The only circumstance where it’s acceptable to drastically increase your position in the face of a falling price is if your estimate of BF independently goes way, way up (the clearest example would be if you have material non-public information, of course, but other sources of alternative data only available to a subset of market participants would work too). If you’re stubbornly revising the estimate of your information quality up at the same time everyone is revising theirs down, you would do well to stop and contemplate Cromwell’s Rule: “I beseech you, in the bowels of Christ, think it possible that you may be mistaken.”

This, then, is where we end our mathematical journey and return to our original quote. The toy model above, despite its simplicity, nevertheless reproduces the dynamics we see in markets writ large: there are a few very specific cases where it’s acceptable to average down[4], but these are rare and obvious when they occur (hint: “I liked the stock and nothing has fundamentally changed” is explicitly not one of them!). The correct advice, given this, is thus not to try it, rather than to establish general rules for safely doing it as your base case. In other words- thousands of words and a dozen equations later, my advice remains… losers average losers. And you, dear reader, are no loser.

Until next time[5],

Q.

[1] To head off any criticism at the pass, I am well aware this is not really how early stage biotech stocks trade, but for the purposes of this article we will assume that things like stock issuance, joint ventures and partnerships, executive comp, sector sentiment, trade positioning, net cash, interest rates, etc. are negligible (boy that’s a lot of caveats) and treat it as a pure binary bet that moves only on the expected approval probabilities. Don’t email me to complain about this being unrealistic, I don’t care.

[2] This really shouldn’t have to be said, but if you’re putting 5%+ of your portfolio into a pre-phase 1 biotech in the hopes of 20xing your money in 10 years, you’re a lunatic who will lose everything eventually, but! I can’t stress enough that this is just an example.

[3] Note that everything that follows is still true if the price changes in the market are driven by changes in X rather than p- just divide the formulas through by X and you’ll see that the logic holds.

[4] We’ll put these in a footnote here for people who think “yes, it never works out for those people, but it might work out for me!” Off the top of my head, the two primary reasons to fade movements on a position, other than the aforementioned change in your side information, would be because this is in a portfolio of many other bets, and either 1. your other bets have gotten much less attractive on average, requiring you to cut your allocations to them and increasing the relative attractiveness of this one, or 2. this bet has attractive negative covariance properties with your other bets, such that it will tend to pay off when you are losing elsewhere and vice versa. The other reason why you might wish to increase a position after a loss is that your initial position was deliberately much smaller than you wanted- a “starter” position rather than a real bet. That’s a bit beyond the scope of this letter, though, so I will ignore it.

[5] Like James Bond, I will return- I actually have two more posts planned to go out in the next two months, one about return prediction in indexes writ large, and one containing my extremely scientific and 100% CFA Institute guaranteed EOY price targets for 2024.

Great, thanks for writing this.

Small typo 0.5/0.95 I think should be 0.05/0.95. I hadn't seen (or at least don't remember) the log odds Bayes' rule and misunderstood it the first time. There is unexpected tempo/complexity jump going from the "trivial" Bayes' rule which is written out in detail, to the more complex log odds version without any exposition.

In the same vein is the simplification of w_0^* supposed to be trivial?

I'm going to stick with the 'Losers average Lovers." sell and move on